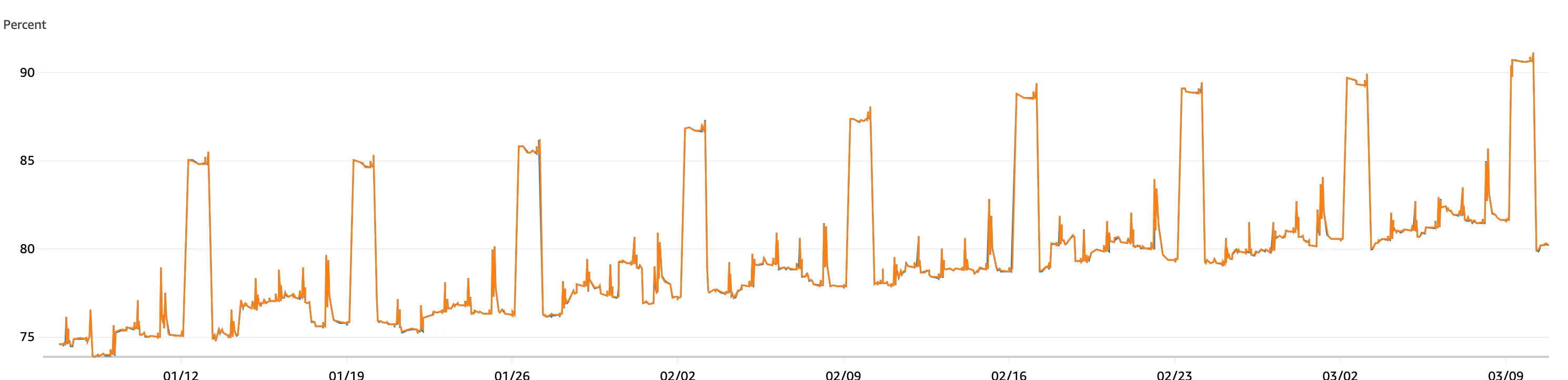

Recently, we’ve had a situation with a large Redis cluster for one of our core products. We’ve seen slowly increasing memory usage - memory would fluctuate over the course of a day, but the general floor or memory usage was slowly creeping up. We’d gotten around this a few times by just resizing the cluster, but this gets more and more expensive, and we decided to get to the bottom of it.

However - this posed a few challenges!

- The cluster was not publicly accessible, so we couldn’t use tools such as rma that would otherwise be useful.

- We’d also accumulated around 13 million keys, which also made it infeasible to use Redis Insights - which only supports up to 10,000 keys.

- Finally, the instance now also took up around 36GB in memory, which made it pretty much impossible to run locally for analysis.

The Solution

Luckily, Elasticache allows you to export an RDB file from the cluster. This is a much smaller (10GB in this case) file, and we can work with that locally.

There’s some useful (if slight out of date) documentation on how to do this - these are the steps we followed:

Create an S3 bucket to store the RDB file.

This is fairly straightforward - make sure you create the bucket in the same region as your cluster!

Grant ElastiCache access to the S3 bucket.

To do this, add a new External Account to the ACL for the bucket - you may have to switch Object Onwership to enable ACLs before you can do this.

You need to add a Grantee with the canonical ID mentioned in the docs above, and give it List and Write permissions on Objects and Bucket ACL.

Export the RDB file from a cluster backup

Handily, there’s now a quick way the AWS UI to do this - navigate to your most recent backup, click the Export button, select your S3 bucket, and click Export.

At this point, we have an .rdb file in our S3 bucket. We can grab this file and store it locally - unfortunately, it’s a binary format, so it’s not much use yet. rdbtools to the rescue!

RDBtools

RDB Tools is tool for working with Redis RDB files. It can do a lot of things, but we’re interested in the rdb -c memory command, which can read the RDB file and output a CSV file with the memory usage per key.

To do this, we need to install rdbtools - I had a few issues getting this to work locally on Python 3.12 - the easiest way for me was to create a virtual environment using pipenv and install from source.

pipenv install python-lzf

pipenv shell

git clone git@github.com:sripathikrishnan/redis-rdb-tools.git

cd redis-rdb-tools

python setup.py installOnce this is working, you can now process the RDB file:

rdb -c memory /path/to/your/dump.rdb > memory_report.csvThis generates a CSV file containing:

- Key names

- Memory usage per key

- Data types

- Key expiration information

Analyzing the Results

At this point, how you analyse the results is up to you. In our case, we identified a large set of keys without any expiries set, which had been building up for many months.

We were unfortunate to have key structures that didn’t lend themselves well to grouping - each one was fairly unique, so we had to do some fairly manual digging to find the culprits, with some regex fun to confirm our suspicions, and a command to run against the cluster to clear out some of the keys that were causing issues.

If you have more structured keys, you could at this point use something like pandas to group and analyse the results.

Hopefully this helps others avoid a few hours of fruitless searching in the future!